PostgreSQL DB Stack - V 4.7

PostgreSQL DB Stack

The PostgreSQL db stack is used to build and deploy PostgreSQL databases in dataspaces and to produce deployment-ready containers for the CloudOne environment.

PostgreSQL (also referred to as Postgres) is an open-source relational database management system (RDBMS) emphasizing extensibility and standards compliance. It can handle workloads ranging from single-machine applications to Web services or data warehousing with many concurrent users.

The containers produced in this db stack share common requirements with other application and db stacks deployed to the CloudOne environment. These shared requirements include:

- Security scanning for any vulnerabilities

- Staging of the db container in the appropriate Docker container repositories, tagged according to CloudOne conventions

- Completion of Unit Testing and User Acceptance Testing

- Gated approvals before allowing deployments into pre-production and production environments

- Audit trail and history of deployment within the CloudOne CI/CD Pipeline

Because of these requirements, the PostgreSQL db stack is provided to support the build and deployment of PostgreSQL databases in a manner that integrates with CloudOne requirements and processes. Deployment flows through a Continuous Integration stage first, followed by the Continuous Deployment stages. The Continuous Integration stage focuses on building the application, scanning it for security vulnerabilities, and staging the container in the appropriate Docker repository, ready for deployment. Subsequent pipeline stages deploy the application to the appropriate target Kubernetes spaces.

Getting Started in the Azure DevOps environment

Refer to the following link to learn about getting started in the Azure DevOps environment: Getting Started in Azure DevOps Environment

Repository Structure

The repository for the PostgreSQL db stack contains a conf directory with a few subdirectories for custom config files, and one or two directory trees with details for deployment, as follows:

- conf - this directory contains the directory structure in which to place custom configuration files.

- appCode-serviceName – (named as the AppCode followed by the application or service name) contains the Helm chart deployment materials if Helm v2 charts are used (this is the default)

- manifests - contains Kustomize manifests if Kustomize overlay files are used as an alternative to Helm charts

Also at the top of the repository is a file called azure-pipelines.yml. This file contains a reference to the appropriate version of the CI/CD pipeline logic, some variables unique to the application (e.g. container version), and YAML data structures providing key information about the environments into which to deploy the application and the sequence of events to complete the deployment (e.g. dependencies, additional steps to retrieve secrets to be passed to the deployed container, etc.).

Other items in this repository generally do not need to be modified and should be left unchanged to avoid breaking the pipeline workflows.

Database Configuration into the CloudOne CI/CD Pipeline

Once you have navigated to the appropriate repository, clone it to a local development environment (i.e. a local workstation) for the actual development and configuration work on the PostgreSQL database to be deployed. Configuring the pipeline includes setting the appropriate container version (the appVersion variable under the parameters: section) and defining the target workspace and dataspace through template: under extends:. Once the database is ready to be deployed into the CloudOne workspace and the new application version has been set, create a Git pull request for the changes. Creating this pull request triggers the Continuous Integration pipeline.

Continuous Integration and Continuous Delivery Pipelines

Please note that the document “CloudOne Concepts and Procedures” contains more details about the specific flow of the application through the CI/CD pipelines.

Once a pull request has been created against the Git repository, it will trigger the start of the CI/CD pipeline in order to build, scan and prepare the application to deploy into the Workspace. Although the CI/CD pipeline flow is automatically triggered, there are times when it needs to be manually run (or re-run) and the pipeline should be examined for the results of its preparation of the application and deployments. Details for examining the CI/CD pipeline and analyzing its results can be found here: Continuous Integration and Continuous Delivery Pipelines

Pipeline Definition: azure-pipelines.yml file

The logic for the v4.3 PostgreSQL CI/CD pipeline is a common code base shared by all applications. The configuration that applies this common logic to a specific application is defined in a file named azure-pipelines.yml in the top-level directory of the application's source code repository.

The azure-pipelines.yml file is a YAML file using standard YAML syntax. For general information about YAML structures, there are many available resources, including the tutorial at this link: YAML Tutorial.

Certain data elements must be defined within the azure-pipelines.yml file as a prerequisite to the CI/CD pipeline running, while other elements are optional and are used to modify the standard behavior of the CI/CD pipeline.

More details about the azure-pipelines.yml file can be found here: General Structure of the azure-pipelines.yml File

Configuration and Customization of the PostgreSQL Pipeline

Global Variables

The variables YAML object defines simple variables accessible across the pipeline logic. For PostgreSQL pipelines, there is one required variable:

    extends:
      parameters:
        appVersion: <version>

appVersion specifies the version of the container to be pulled from Artifactory. The name of the container will match the name of the application defining the pipeline.

Database-Specific Pipeline Configuration

The extends YAML object is a complex object consisting of additional YAML objects. This object is used to extend the v4.3 pipeline logic (referenced by the repository defined in the resources object) by (a) referencing the correct dbstack pipeline entry point (dbaas/devexp-postgresql.yml@spaces for the PostgreSQL pipeline) and (b) passing a set of YAML objects as parameters to influence the behavior of the pipeline to meet an application team's specific needs.

The extends YAML object consists of 2 objects beneath it:

- template

- parameters

The template YAML object is a single value set to the initial entry point for the V4 pipeline for the PostgreSQL dbstack, so it should always be defined as follows:

    variables:
      - { group: postgresql-<dataspace repo name>.props }

    resources:
      repositories:
        - { repository: templates, type: git, name: devexp-engg/automation, ref: release/v4.7 }
        - { repository: spaces, type: git, name: spaces, ref: dbaas/v1.5 }

    extends:
      template: dbaas/postgresql-<dataspace repo name>.yml@spaces

The parameters YAML object is defined immediately following the template object and at the same indentation level. This is the object that requires the most attention and definition to be set up.

Pipeline modes: refresh, backup, backup-list and restore

In addition to deployments, pipeline v4.3 can run backups, list available backups, and restore a backup.

    parameters:
      - {
          name: mode,
          displayName: "Select action to perform",
          type: "string",
          values: ["refresh", "backup", "backup-list", "restore"],
          default: "refresh",
        }
      - { name: restoreFrom, displayName: "Provide source to restore from", type: string, default: "<dataspace repo name>" }
      - { name: backupId, displayName: "Provide backup id to restore from", type: string, default: "latest" }
      - { name: restoreFromSgrid, displayName: 'Restore from NPC Sgrid ?', type: 'string', values: ['yes','no'], default: 'no' }

The default mode for the pipeline is refresh; change it as required. Values for restoreFrom and backupId are required only during restore operations.
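To make the restore inputs concrete, the sketch below lists the parameter values one might select when queuing a restore run. It is an illustrative summary of run-time selections, not a file the pipeline reads. The values are the defaults and placeholders from the parameter definitions above, and the idea that a specific backup id comes from a backup-list run is an assumption.

```yaml
# Illustrative run-time selections for a restore (not part of azure-pipelines.yml)
mode: restore                         # one of: refresh, backup, backup-list, restore
restoreFrom: <dataspace repo name>    # source to restore from (placeholder)
backupId: latest                      # or a specific id, assumed to come from a backup-list run
restoreFromSgrid: "no"                # left at its default
```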

Backup schedule

Pipeline v4.3 can run scheduled backups defined in azure-pipelines.yml. The schedules section is optional:

    schedules:
      - cron: "05 04 * * *"
        displayName: "Daily at 04:05 AM"
        branches:
          include:
            - <branch-name>
        always: true

Please note that the schedule must be defined in the master branch. You can refer to the Crontab site to build your cron schedule.
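As a hedged illustration of the schedule syntax, the following sketch shows a weekly variant. The cron expression, the display name, and the choice of master as the included branch are assumptions made for illustration, not values taken from the CloudOne pipeline. Azure Pipelines evaluates cron schedules in UTC.

```yaml
# Illustrative only: a weekly backup schedule (all values are assumptions).
schedules:
  - cron: "0 2 * * 0"            # minute hour day-of-month month day-of-week: Sunday at 02:00 UTC
    displayName: "Weekly on Sunday at 02:00 UTC"
    branches:
      include:
        - master                 # assumed branch name
    always: true                 # run even when there are no code changes since the last scheduled run
```

Setting always to true suits backups, because a backup should run on schedule whether or not the repository has changed.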

Additional Configurations

Support for Kong

Support for Kong was introduced in V4.7. Kong needs to be enabled in values.yaml and in any override values files:

kong:

tls:

secret:

dbaas:

enabled: true

domain:

PLEASE NOTE: Ambassador and Kong cannot both be enabled at once. Select either one, but ONLY one at a time.

A new file, tcpingress.yaml, has been added under the Helm templates to support Kong.

How to adjust the CPU request for the database pods?

By default, database pods start with the default CPU request defined in the spaces file. To override this value and reduce CPU usage, use the following section in azure-pipelines.yml:

    extends:
      parameters:
        appVersion: <version>
        postgresql:
          podSpec:
            cpuRequests: 200m

For an existing dataspace, review the Splunk report and set the value to 2 or 3 times the maximum usage. For example, if the maximum CPU usage for the database pod is "50m", set the "cpuRequests" value to "150m".

Review the Splunk reports periodically and tune the "cpuRequests" value.
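The sizing rule can be sketched as a worked example. The 50m maximum is the figure from the example in the text, the multiplier of 3 is the upper end of the suggested range, and the nesting of postgresql under parameters is an assumption about the expected layout.

```yaml
# Illustrative sizing, assuming the Splunk report shows a maximum usage of 50m:
#   3 x 50m = 150m
extends:
  parameters:
    appVersion: <version>        # placeholder, as elsewhere in this document
    postgresql:
      podSpec:
        cpuRequests: 150m        # 3 times the assumed 50m maximum
```

Kubernetes expresses CPU in millicores, so 150m is 0.15 of one CPU core.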

How to configure and deploy database in dataspace?

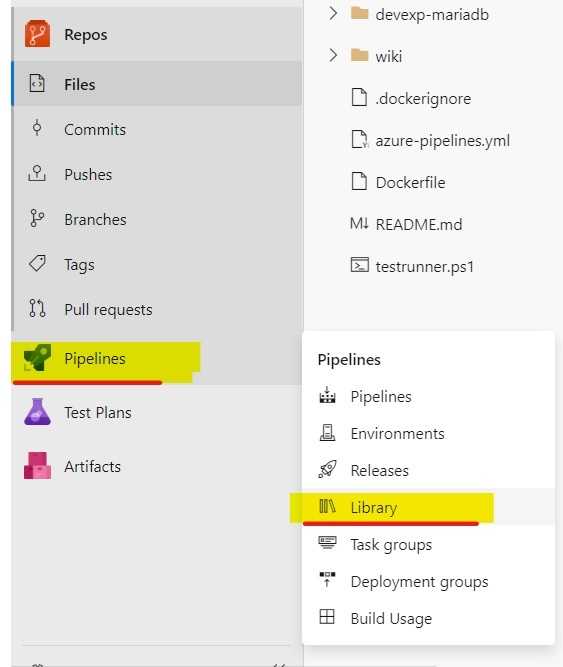

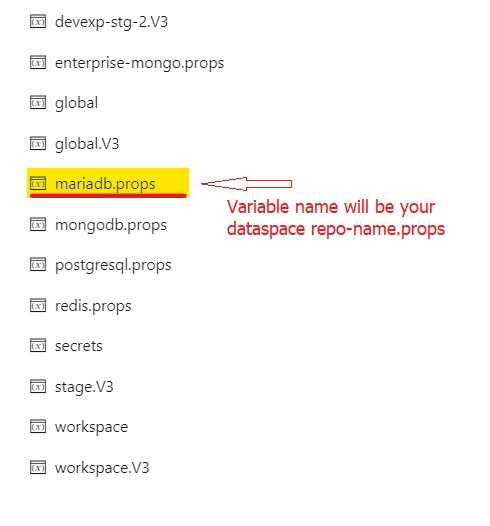

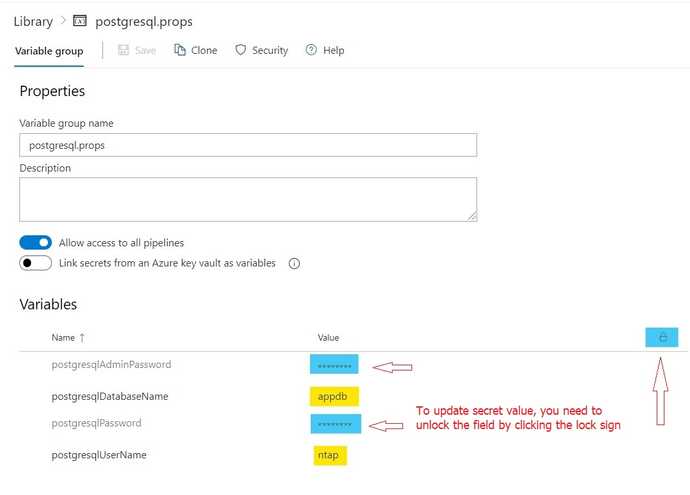

The database is automatically configured with a random password during provisioning. To modify the PostgreSQL passwords, update the <repo-name>.props variable group in the pipeline library.

Update azure-pipelines.yml to include Helm override files for deployment

To override values for the dataspace, update the following section in azure-pipelines.yml:

    workspace:
      helm:
        overrideFiles: |
          dbaas-postgres1/values.workspace.yaml
    dataspace:
      helm:
        overrideValues: |

How to enable database backup?

Backups using the DBaaS self-service option are discontinued for version 4.3. Please refer to the backup and restore document.

How to change database user password?

- Update the password in the props variable group and send it to the ops team.

- The ops team needs to update the password value in the props variable group located in the pipeline library.

- Update the release pipeline: modify the download secret file task and select the uploaded secret file from the dropdown list.

- Create the release to update the Rancher secrets.

- The DB pod is then recreated with the new password. Log in to the database using the new password to verify the change.

Click the below image to watch the video

Exposing databases outside of the Rancher/Kubernetes cluster

See our documentation on the CloudOne Stunnel Client.

System-level Monitoring with Sysdig: Performance and Troubleshooting Insights

Sysdig is a powerful monitoring and troubleshooting tool that provides deep visibility into system-level metrics and events. It allows you to monitor and analyze the performance of your systems and databases, by capturing and analyzing system-level metrics such as CPU usage, memory consumption, disk I/O, and network traffic. With Sysdig, you can identify database-related processes, monitor resource utilization, analyze system events, and create custom metrics and alerts. Overall, Sysdig is a versatile tool that can help you gain a better understanding of the performance and health of your databases and the underlying systems they rely on.

The Sysdig exporter can be enabled to facilitate monitoring and data collection with Sysdig by updating the values.dataspace.yaml file as follows:

    ## Flag to enable sysdig exporter
    exporter:
      enabled: true

DR changes

With the introduction of pipeline v4.7, we have implemented DR capabilities that require changes in the Helm chart. Please refer to the document below for the necessary modifications and kindly update your Helm chart accordingly.

https://selfassist.cloudone.netapp.com/docs/getting-started-with-dr?v1#db-stacks-only

Detailed Pipeline Configuration

The remainder of the configuration work in the azure-pipelines.yml file focuses on defining the target workspace and dataspace for the PostgreSQL database. This includes details for these spaces, any additional tasks needed to prepare the deployments, and dependencies that dictate the sequence of deployments in these spaces beyond the established CloudOne environment deployment approval processes. Details for configuring these elements of the pipeline can be found here: Pipeline Configuration Details

Kubernetes Deployment Objects

To deploy an application, a number of Kubernetes objects must be defined and deployed. The definition of these objects is controlled through a set of files in one of two forms: either as Helm charts (the default method) or as Kustomize files (a newer form of deployment description which has limited support in V4). Information about the contents and customization of these Kubernetes deployment objects can be found here: Kubernetes Deployment Objects

Troubleshooting

If something fails to deploy, the information about why the deployment failed (or was not even initiated) will be found in the logs of the CI/CD pipeline and can be tracked down using the methods described earlier in the “Continuous Integration and Continuous Delivery Pipelines” section.

However, additional information may be required either to better troubleshoot a failed deployment or to investigate the runtime behavior of an application that has been successfully deployed. In those cases, much of the information can be found in the Rancher web console. Information about navigating and analyzing information from the Rancher web console can be found here: Navigating the Rancher Web Console